How to Efficiently Scale the Pods?

Microservices are great for handling load because they allow for efficient scaling of pods. By breaking down an application into smaller independent services, microservices make it easier to manage the individual components and scale them up or down as needed. This means that if one service is experiencing high traffic, it can be scaled up without affecting the rest of the application.

Developers typically use Kubernetes to deploy microservices by managing them as orchestrated pods. In Kubernetes, there are two main methods to scale pods: vertical pod autoscaling (VPA) and horizontal pod autoscaling (HPA). When you read the documentation, everything looks straightforward. Add some CRD, define boundaries, and go. In practice, it is not as obvious as it seems. Moreover, it would be best to keep potential pitfalls in mind to achieve better results.

In this article, I will tell the story of how we automatically scale pods in production and why we created a custom VPA.

Goals

Let's start by discussing our goals. Our main objective is to eliminate the need for developers to define resources for their services. There is almost always a gap between the resources a developer declares and the resources the application actually consumes. For instance, developers might decide that 1 CPU is sufficient for their service. However, with time, it could become inadequate, or it could have been excessive from the beginning. Without automation, we would need a process to detect this gap and prompt developers to adjust their resource definitions from time to time. Not very convenient.

Look at the picture below where an application is under the load. You can see that Grafana calculates the mean and peak CPU usage for us: 0.6 CPU and 6 CPU, respectively.

The main question here is, according to this data, where, as a developer, should I draw the line with CPU requests? Here?

Or here?

Ideally, requests and actual usage should stay close to each other. We shouldn't reserve, say, 4 CPUs for a pod that ends up using only 1 CPU. Reservations like this leave a cluster underloaded yet unable to schedule new pods, because its resources are already claimed.

On the other hand, if we reserve 4 CPUs but end up using 8 CPUs, the pod depends on CPU time it never reserved, which can lead to unnecessary throttling. We want to avoid that as well.
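The two cases above can be expressed as a simple comparison between the CPU request and the observed usage. This is only a minimal sketch: the function names and the millicore units are assumptions made for illustration, not part of any Kubernetes API.

```go
package main

import "fmt"

// reservationGap returns request minus usage in millicores (hypothetical helper).
// A positive value is CPU that is reserved but idle;
// a negative value is CPU that is used but was never reserved.
func reservationGap(requestMilli, usageMilli int64) int64 {
	return requestMilli - usageMilli
}

// describe turns the gap into a short human-readable verdict.
func describe(requestMilli, usageMilli int64) string {
	gap := reservationGap(requestMilli, usageMilli)
	switch {
	case gap > 0:
		return fmt.Sprintf("over-reserved by %dm", gap)
	case gap < 0:
		return fmt.Sprintf("under-reserved by %dm", -gap)
	default:
		return "aligned"
	}
}

func main() {
	fmt.Println(describe(4000, 1000)) // 4 CPUs requested, 1 CPU used
	fmt.Println(describe(4000, 8000)) // 4 CPUs requested, 8 CPUs used
}
```

The goal of the rest of the article is to keep this gap close to zero under regular load.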

Generally, we want to avoid the situation below where 25 percent of node resources are reserved for nothing:

We don't worry much about limits, especially for CPU. Although this means we overcommit (sell more than the nodes physically have), it should be fine as long as actual usage stays close to the requests under regular load. I will discuss how to handle peak loads later, but these generous limits can also help there (within some limitations).

VPA

Vertical Pod Autoscaler is an excellent option for keeping a pod's resource definitions aligned throughout the service lifecycle. Imagine that the line from our example moves automatically according to actual resource consumption. VPA largely frees developers from manual tuning and keeps resource requests close to actual usage.

Before we go further, let’s recall what parts VPA consists of:

When the recommender pod is restarted, or there is a cold start for pods, Recommender uses Prometheus-based data sources to evaluate recommendations. It then enriches this data with kube-metrics to provide more up-to-date recommendations.

The admission controller (which is the second part) applies recommendations when a pod is created. And finally, there is the updater that checks new recommendations provided and recreates affected pods.

We do not use the updater to avoid unpredictable behavior when using HPA in parallel. We discuss it later in the article.

Now, we are ready to adopt VPA. But let us do it right. Imagine we have the following configuration provided by a developer:

    # values.yml
    deployment:
      resources:
        requests:
          memory: "512Mi" # minimum memory in the pod's requests (but could be more)
          cpu: "0.1" # minimum CPU in the pod's requests (but could be more)
        limits:
          memory: "1Gi" # minimum memory in the pod's limits (but could be more)
          cpu: "2.0" # minimum CPU in the pod's limits (but could be more)

Let's consider these numbers as minimum values to align with developers' expectations. Of course, we can apply greater values in both cases when a pod is created. Here, we face the first problem with the standard VPA implementation. We can define the minimum values for requests using the CRD:

    # VPA resource
    resourcePolicy:
      containerPolicies:
        - containerName: frontend-app
          minAllowed:
            cpu: "0.1" # the same as defined requests
            memory: 512Mi # the same as defined requests

Unfortunately, we cannot do the same for limits. Yes, there is a LimitRange specification that VPA respects, but it can be applied only to a whole namespace.

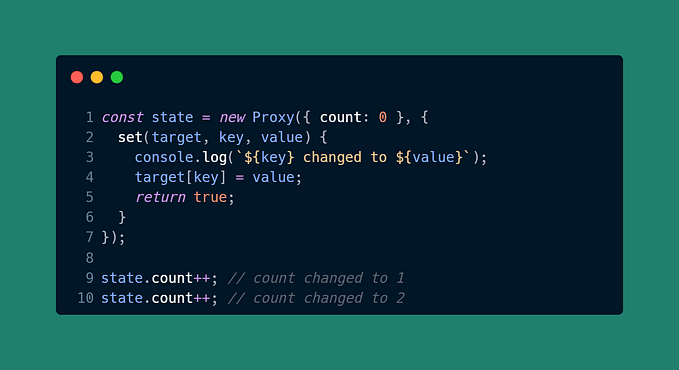

Currently, the only option is to fork VPA and create a custom implementation to calculate limits. Let me show you the example implementation for CPU:

    func scaleCustomQuantityCPU(originalRequest, originalLimit, scaleResult *resource.Quantity, rounding roundingMode) (*resource.Quantity, bool) {
        scaledOriginal := big.NewInt(scaleResult.MilliValue())
        // use x2 of the recommended request as the limit baseline
        scaledOriginal.Mul(scaledOriginal, big.NewInt(2))
        originalLimitMilli := big.NewInt(originalLimit.MilliValue())
        // round scaledOriginal up to a whole number of CPUs (a multiple of 1000 millicores)
        if scaledOriginal.Int64()%1000 != 0 {
            scaledOriginal.Div(scaledOriginal, big.NewInt(1000))
            scaledOriginal.Add(scaledOriginal, big.NewInt(1))
            scaledOriginal.Mul(scaledOriginal, big.NewInt(1000))
        }
        // choose the scaled or the original limit, whichever is larger
        if scaledOriginal.Cmp(originalLimitMilli) < 0 {
            scaledOriginal = originalLimitMilli
        }
        ... // round to units
        return resource.NewMilliQuantity(math.MaxInt64, originalLimit.Format), true
    }

Unlike the original implementation, we do not preserve the ratio between the limits and requests that developers defined, because developers would then have to keep that ratio in mind. We don't want to increase cognitive load, so we use a constant multiplier (x2) chosen from our experience.

Next, we round up the CPU limit to the nearest integer number. The idea here is to use integer values for CPU limits to avoid throttling by CFS if the limit is less than 1 CPU. If we calculate 0.5 CPU, then we will expand it to 1.0.

Finally, we compare the calculated value to the value defined by limits and choose the greater one.

The same implementation could be used for memory as well.
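The three steps described above (double the recommended request, round up to a whole CPU, take the larger of the result and the developer-defined limit) can be reproduced with plain integers. This is a simplified sketch rather than the patched VPA code: the function name `cpuLimitMilli` is hypothetical, values are millicores, inputs are assumed to be non-negative, and overflow handling is left out.

```go
package main

import "fmt"

// cpuLimitMilli derives a CPU limit (in millicores) from a recommended request.
// Hypothetical helper that mirrors the steps described in the text.
func cpuLimitMilli(recommendedRequestMilli, originalLimitMilli int64) int64 {
	// Step 1: use x2 of the recommended request as the baseline.
	limit := recommendedRequestMilli * 2

	// Step 2: round up to a whole number of CPUs (a multiple of 1000m).
	if rem := limit % 1000; rem != 0 {
		limit += 1000 - rem
	}

	// Step 3: never go below the limit the developer defined.
	if limit < originalLimitMilli {
		limit = originalLimitMilli
	}
	return limit
}

func main() {
	fmt.Println(cpuLimitMilli(250, 0))     // 500m is rounded up to 1000m
	fmt.Println(cpuLimitMilli(100, 2000))  // developer-defined 2000m wins
	fmt.Println(cpuLimitMilli(1200, 2000)) // 2400m is rounded up to 3000m
}
```

With the `values.yml` from the example (0.1 CPU requested, 2.0 CPU limit), the limit stays at 2 CPUs until the recommended request exceeds 1 CPU.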

More VPA challenges

We faced two more significant challenges with VPA adoption: using VPA with release candidates and fetching appropriate metrics using one Prometheus storage for multiple environments like dev/prod.

We use release candidates to test new versions of software in production. As shown in the picture below, we can route the traffic to the release candidate using a special cookie or header.

In Kubernetes terms, the release candidate is just a parallel deployment with its own service, which we can use to route the traffic. The issue with this approach is that VPA recommendations are tied to a container name. So, we have two options:

- Use the same container name for the stable release and the RC. In this case, we can apply recommendations. However, the RC's usage then gets mixed into the statistics, which may lead to non-optimal recommendations.

- Use different container names. In this case, the RC has no historical data of its own, which also leads to non-optimal recommendations.

Finally, we decided to patch the recommender to use the stable version recommendations for release candidates:

    var resources logic.RecommendedPodResources
    hasRecommendation := false
    if strings.HasSuffix(observedVpa.Name, "-rc") {
        klog.V(4).Infof("Release Candidate VPA %s: get recommendations from primary release", observedVpa.Name)
        primaryVPAName := strings.TrimSuffix(observedVpa.Name, "-rc")
        primaryReleaseKey := model.VpaID{
            Namespace: observedVpa.Namespace,
            VpaName:   primaryVPAName,
        }
        vpaPrimary, foundPrimary := r.clusterState.Vpas[primaryReleaseKey]
        if !foundPrimary {
            klog.V(4).Infof("Release Candidate VPA %s: do not find primary VPA", observedVpa.Name)
            continue
        }
        resources = r.podResourceRecommender.GetRecommendedPodResources(GetContainerNameToAggregateStateMap(vpaPrimary))
        hasRecommendation = vpaPrimary.HasRecommendation()
        // patch container name
        resources[observedVpa.Name] = resources[primaryVPAName]
        delete(resources, primaryVPAName)
        klog.V(4).Infof("Release Candidate VPA %s has recommendations: %+v", observedVpa.Name, resources)
    } else {
        resources = r.podResourceRecommender.GetRecommendedPodResources(GetContainerNameToAggregateStateMap(vpa))
        hasRecommendation = vpa.HasRecommendation()
        klog.V(4).Infof("VPA %s has recommendations: %+v", observedVpa.Name, resources)
    }

This solves the issue with release candidates, but one more problem remains: a single Prometheus source for all environments. There is no general way to add custom filters to the recommender's PromQL queries, so we patched the recommender again to filter by the env label:

    podSelector = fmt.Sprintf("%s, %s=\"%s\"", podSelector, "env", os.Getenv("K8S_ENVIRONMENT"))

VPA: final steps

We currently have a functional solution that reduces developers' need to adjust resources manually. However, we should consider adding more constraints to ensure our automatic recommendations do not adversely affect the overall system.

It is important to define not only the minimum amount of resources required but also the maximum amount that can be allocated:

    # VPA resource
    resourcePolicy:
      containerPolicies:
        - containerName: frontend-app
          maxAllowed:
            cpu: "4.0" # not more than 4 CPU
            memory: "8Gi" # not more than 8Gi
          minAllowed:
            cpu: "0.1"
            memory: 512Mi

The application can still consume more resources at peak load. To make the system robust against such peaks, let's move on to the next chapter.
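The effect of `minAllowed` and `maxAllowed` on a recommendation can be thought of as a clamp. VPA applies these bounds itself, so the sketch below only illustrates the arithmetic. The function name `clampMilli` is hypothetical, and the values are CPU millicores taken from the policy above.

```go
package main

import "fmt"

// clampMilli keeps a recommended value inside [minAllowed, maxAllowed].
// Hypothetical helper; it assumes minAllowed <= maxAllowed.
func clampMilli(recommended, minAllowed, maxAllowed int64) int64 {
	if recommended < minAllowed {
		return minAllowed
	}
	if recommended > maxAllowed {
		return maxAllowed
	}
	return recommended
}

func main() {
	const minCPU, maxCPU = 100, 4000 // 0.1 CPU and 4.0 CPU from the policy

	fmt.Println(clampMilli(50, minCPU, maxCPU))   // raised to the minimum
	fmt.Println(clampMilli(600, minCPU, maxCPU))  // inside the bounds, unchanged
	fmt.Println(clampMilli(6000, minCPU, maxCPU)) // capped at the maximum
}
```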

HPA

Let’s look at the graph below one more time:

To survive the load, we could define enormous limits for an application with the load pattern above, but then we need to be sure that the nodes have enough resources and that neighboring pods are not affected.

Typically, an application - especially a real-time one that handles user requests - tends to use more CPU and memory as the number of requests increases. Although other factors may contribute to this behavior, we will focus on this particular one for now to keep things simpler.

To reduce resource consumption per pod, we can scale horizontally by adding more service instances. This option is provided by another K8s component: Horizontal Pod Autoscaler (HPA).

It's important to note that horizontal scaling helps distribute the load across individual pods, which can reduce average numbers. This is why using VPA and HPA simultaneously is generally not recommended, especially when the Updater is enabled and horizontal scaling is done based on CPU/memory.

Using HPA based on the average request count (RPS per pod) is safer. Unfortunately, Kubernetes does not have built-in support for this metric, so we need to do several things:

- Collect the metric for all services in Prometheus. The easiest way here is to use a service mesh, like Linkerd or Istio.

- Install a Custom Metrics Server: https://github.com/kubernetes-sigs/custom-metrics-apiserver.

- Define custom rules to make the metric available in HPA. For instance, a working example for Istio:

    rules:
      custom:
        - seriesQuery: '{__name__=~"^istio_requests_total$", env="prod", destination_workload!="unknown|", source_app!~"unknown|", namespace!="", reporter="destination"}'
          resources:
            overrides:
              namespace: {resource: "namespace"}
              pod: {resource: "pod"}
          name:
            matches: "^(.*)_total"
            as: "${1}_per_second"
          metricsQuery: 'sum(rate(<<.Series>>{<<.LabelMatchers>>}[2m])) by (<<.GroupBy>>)'

You can find more information in the following article: https://caiolombello.medium.com/kubernetes-hpa-custom-metrics-for-effective-cpu-memory-scaling-23526bba9b4.
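The `name` rule in the configuration above is what turns the counter `istio_requests_total` into the metric name `istio_requests_per_second` that HPA refers to later. The sketch below reproduces that rewrite with Go's standard `regexp` package. It only demonstrates the pattern and the `${1}` substitution, assuming Go-style expansion; the helper name `exposedMetricName` is hypothetical, and the adapter's internal implementation may differ.

```go
package main

import (
	"fmt"
	"regexp"
)

// totalSuffix is the "matches" pattern from the rule.
var totalSuffix = regexp.MustCompile(`^(.*)_total`)

// exposedMetricName applies the "as" template to a series name.
// Names that do not match the pattern are returned unchanged.
func exposedMetricName(series string) string {
	return totalSuffix.ReplaceAllString(series, "${1}_per_second")
}

func main() {
	fmt.Println(exposedMetricName("istio_requests_total"))
}
```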

HPA: configuration

After we define and make the metric available, we need to provide some reference for developers and template HPA for every Helm release:

    # values.yml
    deployment:
      replicas: 3 # min replicas count for HPA
      hpa:
        enabled: true # enable HPA or not
        multiplier: 2 # multiplier (maxReplicas = replicas * multiplier)
        exactMaxReplicas: 6 # override multiplier (maxReplicas = exactMaxReplicas)
        avgRequestsPerSecond: 50 # RPS per pod

In the example above, we expect the application's peak load to be around 300 RPS (6 replicas at 50 RPS each). We can generate the HPA from this configuration as follows:

    ---
    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: {{ .Values.service.name | quote }}
    spec:
      maxReplicas: {{ $maxReplicas }}
      minReplicas: {{ $replicaCount }}
      scaleTargetRef:
        apiVersion: argoproj.io/v1alpha1
        kind: Rollout
        name: {{ .Values.service.name | quote }}
      behavior:
        scaleDown:
          stabilizationWindowSeconds: 120 # we reduced it a bit (default is 300)
      metrics:
        - type: Pods
          pods:
            metric:
              name: istio_requests_per_second
            target:
              type: AverageValue
              averageValue: {{ .Values.deployment.hpa.avgRequestsPerSecond }}

You may notice that developers still have to tune the HPA configuration themselves; we did not address automatic adjustment here. This is something we plan to work on in the future.
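To make the numbers concrete, here is a small sketch of the two calculations involved. `maxReplicas` follows the comments in `values.yml` (the multiplier applies unless `exactMaxReplicas` is set), and `desiredReplicas` follows the scaling rule from the Kubernetes HPA documentation, `ceil(currentReplicas * currentMetric / targetMetric)`, bounded by the minimum and maximum replica counts. Both function names are hypothetical, treating a zero `exactMaxReplicas` as "not set" is an assumption, and the sketch ignores HPA's tolerance and stabilization windows.

```go
package main

import (
	"fmt"
	"math"
)

// maxReplicas returns exactMaxReplicas when it is set (> 0),
// otherwise replicas * multiplier.
func maxReplicas(replicas, multiplier, exactMaxReplicas int) int {
	if exactMaxReplicas > 0 {
		return exactMaxReplicas
	}
	return replicas * multiplier
}

// desiredReplicas applies the documented HPA ratio rule and clamps the
// result to [minReplicas, maxReplicas].
func desiredReplicas(current int, currentAvgRPS, targetAvgRPS float64, minReplicas, maxReplicas int) int {
	desired := int(math.Ceil(float64(current) * currentAvgRPS / targetAvgRPS))
	if desired < minReplicas {
		return minReplicas
	}
	if desired > maxReplicas {
		return maxReplicas
	}
	return desired
}

func main() {
	upper := maxReplicas(3, 2, 6)
	fmt.Println("max replicas:", upper)
	fmt.Println("peak capacity, RPS:", upper*50)

	// 3 pods currently serve 80 RPS each while the target is 50 RPS per pod.
	fmt.Println("desired replicas:", desiredReplicas(3, 80, 50, 3, upper))
}
```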

Scaling: further steps

OK, we have achieved our goals:

- Our actual usage is now very close to requests, so we utilize our clusters effectively.

- Developers no longer need to adjust resources regularly. Of course, there are exceptions where resources must be defined carefully for a particular service, but the number of such cases is very limited.

- We can survive peak loads because we can scale horizontally and have headroom thanks to generous limits.

Behind the scenes, we also use node autoscaling to be sure we can spread the pods across different nodes and have enough resources.

And, as I said, we plan to automate HPA adjustment. The basic idea we want to try is to measure RPS during component performance testing, with requests and limits bounded by the values we get from the recommender. I hope we can do it and share the results next time.